Return to Resources

Contact Monitoring Ingestion API

Jul 27, 2020

10 min read

We've spent several iterations figuring out how to solve the problem of determining two devices that are within 5 meters of each another for a minimum duration of 5 minutes. That's how we define a "contact" event. This is the thought process and the challenges we faced when designing the system.

Problem

Input into the system: Spatial and temporal data of devices that indicates the position of a device, accuracy radius of the position in meters, timestamp when the position was recorded.

For example:

{ "device_id": "ABC", "venue_id": "XYZ", "timestamp": 1587696631000, "position": { "latitude": 45.0, "longitude": 120.0, "floor": 4, "accuracy": 2 }}Query: Given a device id, a timed window represented by a start and end date time, and a proximity in meters (5m in requirement).

Output: A list of devices along with their spatial and temporal data that were deemed to have contacted, ie. come within the proximity of, while taking the accuracy radius into account, the queried device, for 5 minutes or more.

Problem Breakdown

Since we want to be able to show how many contacts each device/person has, this pretty much requires that the proximity relationships between everyone and everyone else be aggregated at run time.

The problem can then possibly be broken down into 2 parts.

Normalizing each piece of position by time and space, since

the location data is not collected at exactly the same time between all devices, we can only determine contact by loosely matching on fixed time window

reported position is not guaranteed to be always accurate, some can be discard if they have too low of accuracy

The purpose is to return a list of contact relationships (who is in proximity of whom in a given time window) between two devices.

Given the list of contact relationships between any two devices in each time window, track and aggregate them, while keeping track of the duration of the aggregation.

Approaches

Due to potentially significantly large number of position data being ingested (1000 employees at a venue each updating location once a minute = 1000 * 60 * 24 = 1.44 million position records a day), it is not realistic for the system to query such a large dataset directly. We’d have to aggregate this data first.

Universal Grid Approach

First from the spatial perspective, model the world as a large map and create a grid using WGS84 coordinates. At the equator, 0.00001 degrees is approximately 1.1m in length, so we can divide the world into approximately 1m^2 sections.

Then each position data’s coordinates are truncated to 5 decimal places, and stored under the corresponding “section”. When there are position data for multiple devices stored within the same section, we can loosely say that they are within 1 meter proximity of each other.

Taking the accuracy radius and the contact proximity parameter into account, the system can increase the contact zone around the original coordinates by expanding surrounding grid sections, 1 meter per section each direction, and when two contact zones overlap, by the same logic, the overlapping parts would be represented by having position data from two devices sharing the same grid sections.

Illustration showing how a device is within 5 meter proximity of another device, by marking their positions on a grid map, where the red dots represent the infected device’s position with a 2 meter accuracy, the yellow dots (should fill up the rest of the space within the red circle) represent a 5 meter radius proximity around the infected but are still duplicates of the red dots, and the blue dots represent the position a nearby device with a 3 meter accuracy that is potentially within the infected device’s exposure zone.

With this understanding, when position data for a device is ingested, the same position data can be duplicated to cover the whole accuracy and proximity radius and persisted in the data store indexed by the respective coordinates truncated to the 5th decimal place. And a “contact event” is derived when two position data occupy the same position.

While the above approach illustrates the idea, it will blindly apply the 5 meter proximity radius to all position data ingested and can create false positives when two devices are in fact 10 meters apart. Therefore the proximity value should be decreased to 2.5 meters (or 3m, since the grid unit is 1m) so that the max proximity is still 5 meters.

So far with this approach we are able to deduce the spatial side of the problem, ie. given the position of a device of someone reported as infected (the red and pink dots), what other devices are nearby in proximity (dots also shared by other devices).

Problem:

Sheer number of dots to update for each position event.

The distance of 0.00001 degrees decreases in latitude the further we're away from the equator (at 45 degrees N it is 0.78m), so accounting for that will add more complexity.

Rounding 2.5m to 3m for proximity effectively makes it a 6m proximity.

MongoDB/Postgres (PostGIS) Geospatial Queries

Another potential way is to use MongoDB’s or Postgres with the PostGIS extension geospatial indexed queries. The position events could be stored as circles/polygons and then the DB could be queried to return other polygons that are within the proximity radius.

Problem: It is a lot of coordinates to store. For a multi tenant system with millions of events per tenant per day, long term scalability is a concern.

Pre-calculated Bounding Box and Filter

Picture illustrating the approach described, where the red dot is the position event in question, orange dots are the position events within proximity and the green are ones queried but out of proximity.

We know that the maximum distance when two points can be considered “in proximity” is

Therefore if we can query for all the points within this (acc + 9m) radius it should guarantee to contain at least all the points in proximity. From there all we need to do is filter out the ones that are actually not in proximity, ie. after subtracting accuracy values from both points, the distance is greater than 5m (distance - acc1 - acc2 > 5m).

The advantage of this is that we only need to store points, and two options to query this data.

Mongo/Postgres geospatial queries: Less data, computation is done on DB side, we’re unsure about performance when data scales up in a multi-tenant centralized system. But for a system that contains only a single tenant this should be sufficient.

Cassandra/Scylla: It doesn’t support geospatial queries, so we can’t really query for everything within a circular radius, but querying for a bounding box is a lot simpler, it would require us pre-calculating the min and max lat/lon values of the bounding box and then it’s simply a matter of querying for everything within a lat/lon range. There will likely be more points returned due to a larger search area, but the extra computation in calculating distance is likely going to be insignificant. Due to the nature of the data (volume and throughput), Cassandra or Scylla DB makes a lot of sense since we’d only be inserting and reading, not updating.

Contact Event Aggregation

To factor in the temporal aspect, since it is unlikely that two position data will be created at the exact same time, there will also need to be a time threshold for contact. To simplify the problem, the timestamp of each position data can be truncated down to the minute and all data within the minute is then bucketed to the same minute bucket.

Combined with one of the above approaches to associate spatial relationships, we can identify a contact in a particular minute time slice. A naive algorithm to aggregate each one minute contact event is to store each contact event at time T, starting with duration of 1 minute, but before inserting, check if there’s a contact event that ends with T-1min as well as event starting at T+1min, and if so, merge the events, increment the duration and delete the obsolete events.

Risks and downsides:

To alter the proximity value from 5m would require all position data to be reprocessed.

This assumes position data is updated at least once a minute.

Accuracy of the position data - we will need to discard data with low accuracy (high accuracy radius), and this may result in data disjointed in time ( {

<}5 minute sequence)Proximity zones do not consider walls.

Architecture diagrams

Initial backend architecture

Initially for the backend, everything will be done within one service.

The device sends position updates to the ingestion API in batches by HTTP POST.

Server discards position events with accuracy larger than 4 meters, inserts position events to the database. There can only be one position event per device per minute, anything more than one will also be discarded.

The server queries the database for position events from other devices within that minute with latlon within a bounding box that surrounds a proximity radius (accuracy+5m proximity+4m max accuracy) defined by the bottom left (lower limit) and upper right (upper limit) coordinates.

Filter the position events that are too far.

For each “position event” to “position event within proximity” pair, create a minute aggregate, and create a contact event of one minute. Look up immediate contact events prior and after and merge and extend as necessary.

The dashboard backend retrieves the contact events with duration at least 5 minutes.

Longer term

gRPC is more efficient than traditional HTTP methods

HTTP/2: one long lived connection between client and server

Protocol buffers (binary data) is lightweight compared to JSON

Kafka for position events for asynchronous throughput.

Kafka for minute aggregates. Concurrent inserts of contact events between two devices could lead to single minute events that are unmerged. This can be alleviated by making sure only the events for any two devices are handled by only one process with proper partitioning in kafka.

Due to time constraint we actually only used MongoDB for the short term solution due to ease of getting started. And due to imitation of data partitioning in the server code for proof of concept, this means the server code can only be run in a single instance.

Load testing

In order to test our architecture for performance and scale, we developed a fake data generator that can simulate devices continuously send data to the ingest api for processing. We simulated 1000 devices sending their position every 15 seconds to load test our initial architecture. We were able to process the data in realtime without any issues. We then built a simple visualizer that inspects the results of the processed data.

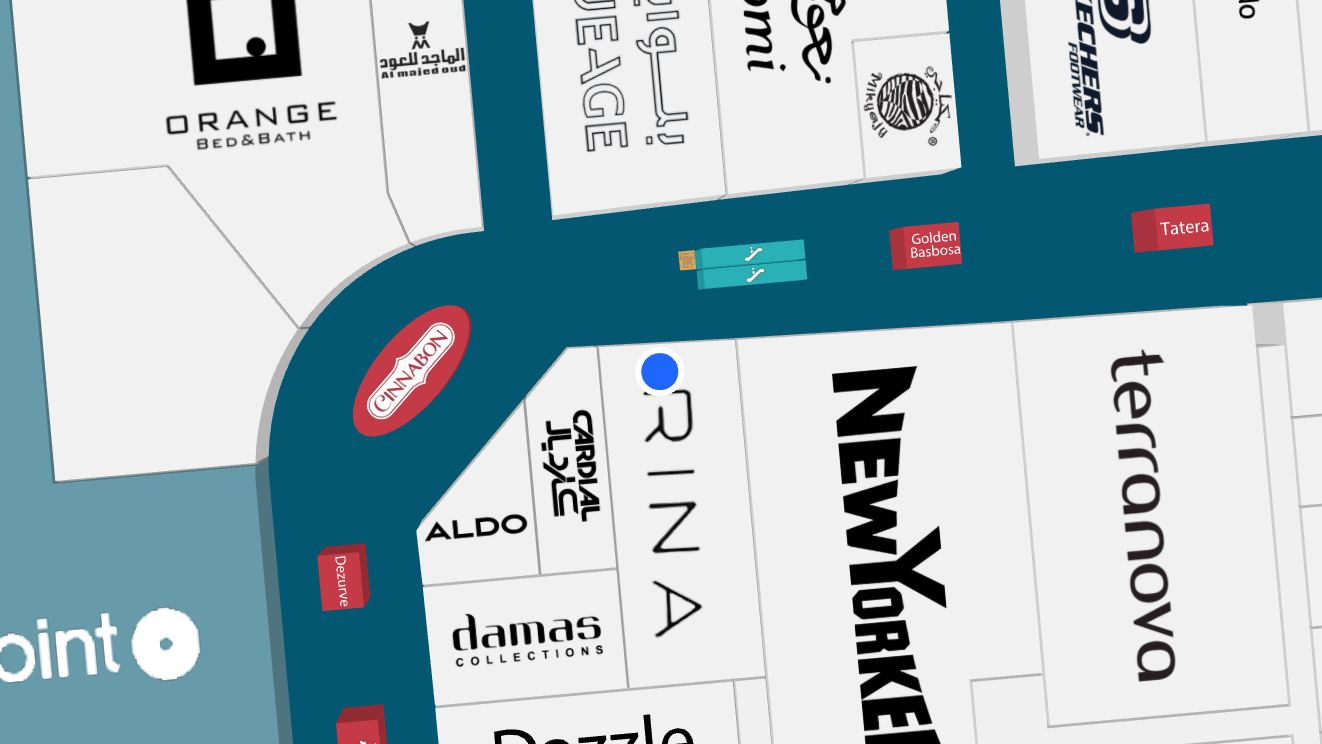

The circles represent a position sent by the device. The position is never a dot since every position data returned by mobile devices has an accuracy. The size of the circle represents the accuracy of the position. A red line between two circles indicates that the system has determined a contact event has happened.